Introduction

Artificial Intelligence (AI) has become one of the hottest topics in IT, especially since the public release of ChatGPT. For many, AI is the future of modern technology, which will speed up work and solve various challenges automatically. For others, particularly skeptics, AI (especially LLM-based AI) is viewed as a bubble or a buzzword that will vanish as quickly as it appeared in the public space.

Personally, my point of view is closer to the first group, but I’m still far from being a fanatic who would introduce AI everywhere. For sensitive operations that require highly efficient solutions, the classical programming approach provides more accurate and more predictable outputs. While I am not convinced that AI will replace developers in the near future, I do believe developers proficient in AI will have a competitive advantage over those who are not. I think we can all agree that AI is currently not suitable for all aspects of our lives, but it can definitely be a powerful tool when used properly.

In some of my previous articles, I presented how to create a Python-based API client using only ChatGPT and how to create Semgrep rules for identifying potentially vulnerable code patterns. In this article, I would like to introduce the topic of AI Agents and demonstrate how to build an agent that can solve the security challenges available in the Damn Vulnerable RESTaurant API Game.

If you’d like to see the demo, check out the theowni/AI-Agent-Solving-Security-Challenges repository. You will find more relevant details in the Results section later in this article.

AI Agents

Introduction

To better understand the presented concepts, let’s first define what we mean by an AI Agent. I would like to present two definitions. A more formal one is shown below:

An Artificial Intelligence (AI) Agent refers to a system or program that is capable of autonomously performing tasks on behalf of a user or another system by designing its workflow and utilizing available tools.

A more generic one which is closer to LLM-based solutions is quoted below:

An AI agent is a system that uses an LLM to decide the control flow of an application.

Based on these definitions, in the context of Large Language Models (LLMs), an AI Agent is capable of autonomously performing tasks by utilizing available tools and making decisions using LLMs. AI Agents can be employed in various use cases, including online research, document creation, report writing, customer support, and more.
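
To make the second definition more concrete, here is a minimal sketch of a loop in which a model decides the control flow. It is only an illustration: `fake_llm`, `list_files`, `read_file` and `run_agent` are hypothetical names, and the "model" is a hard-coded stand-in rather than a real LLM call.

```python
from typing import Callable

# Hypothetical tools: plain functions the "model" may choose between.
def list_files(_: str) -> str:
    return "main.py, utils.py"

def read_file(name: str) -> str:
    return f"<contents of {name}>"

TOOLS: dict[str, Callable[[str], str]] = {
    "list_files": list_files,
    "read_file": read_file,
}

def fake_llm(history: list[str]) -> tuple[str, str]:
    """Stand-in for a real LLM call: returns (action, argument).

    A real agent would send the history to a model and parse its reply.
    """
    if not history:
        return "list_files", "."
    if len(history) == 1:
        return "read_file", "main.py"
    return "finish", "done after inspecting main.py"

def run_agent(decide: Callable[[list[str]], tuple[str, str]], max_steps: int = 5) -> str:
    history: list[str] = []
    for _ in range(max_steps):
        action, argument = decide(history)      # the model picks the next step
        if action == "finish":
            return argument
        observation = TOOLS[action](argument)   # the program only executes it
        history.append(f"{action}({argument}) -> {observation}")
    return "stopped: step limit reached"

print(run_agent(fake_llm))
```

The important design point is that the program contains no fixed sequence of steps: it only executes whatever action the decision function returns, and the step limit guards against a model that never finishes.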

AI Agents can be built from scratch using a programming language or a no-code solution combined with an LLM. Developers can provide tools for these agents to perform actions like:

- Interacting with APIs (e.g., creating documents or obtaining data).

- Interacting with a filesystem.

- Executing terminal commands.

Each tool should include a description specifying its purpose, required arguments, and data format. These details are then used by the decision-making model to plan and execute actions. Modern models have made this part more achievable for developers who would like to quickly create agents without training their own models. Modern models can still be fine-tuned, which means taking a pre-trained model and training it further on a smaller, task-specific dataset. Fine-tuning is not covered in this article, but it could potentially be applied to the demonstrated scenario.
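
As a rough illustration of how such tool descriptions can reach the model, the sketch below renders them into a block of prompt text. `ToolSpec` and `render_tool_prompt` are hypothetical names invented for this example; agentic frameworks do something similar internally, but their exact prompt format will differ.

```python
from dataclasses import dataclass, field

@dataclass
class ToolSpec:
    name: str
    description: str
    # argument name -> expected data format (hypothetical convention)
    arguments: dict[str, str] = field(default_factory=dict)

def render_tool_prompt(tools: list[ToolSpec]) -> str:
    """Turn tool metadata into text a decision-making model can read."""
    lines = ["You can use the following tools:"]
    for tool in tools:
        args = ", ".join(f"{n}: {fmt}" for n, fmt in tool.arguments.items()) or "no arguments"
        lines.append(f"- {tool.name}({args}): {tool.description}")
    return "\n".join(lines)

tools = [
    ToolSpec("read_file", "Return the text of a file.", {"path": "string, relative path"}),
    ToolSpec("challenge_status", "Return the current challenge description."),
]
print(render_tool_prompt(tools))
```

The clearer the name, description and argument format, the easier it is for the model to pick the right tool and call it correctly.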

Open-source Agentic Frameworks

Building an AI Agent from scratch can be time-consuming, requiring significant effort to craft prompts for LLMs and develop the tools used by agents. Fortunately, the concept of agents has evolved quickly, and there are now a number of open-source frameworks that simplify the process of creating agents, such as:

- CrewAI: a framework for orchestrating role-playing, autonomous AI agents.

- LangGraph: a library for building stateful, multi-actor applications with LLMs, used to create agent and multi-agent workflows.

- AutoGen: a programming framework for agentic AI developed by Microsoft.

For the purposes of this article, I’ve chosen the CrewAI framework. CrewAI is highly customizable, Python-compatible, and straightforward to use. The framework also ships with a number of tools that can be provided to agents, such as:

- FileReadTool – a tool for reading files from the filesystem.

- SerperDevTool – a tool for searching the internet through the Serper.dev API.

- ScrapeWebsiteTool – a tool for website scraping purposes.

- PGSearchTool – a tool for searching within PostgreSQL databases.

- VisionTool – a tool for extracting text from images.

- DirectorySearchTool – a tool for searching within a directory structure.

More tools can be found in the official documentation. Developers can also create custom tools using Python, as we will do in this article. Furthermore, these agentic frameworks can work with popular large language models such as OpenAI’s GPT models, Llama, Gemini etc. For the purposes of this article, I decided to use GPT-4o, but you can also use local models served through Ollama.

Building PizzaHackers Crew

Introduction – the Agent

In this section, we will build the PizzaHackers Crew, or more precisely, one member of this crew. We will call this member the Agent. The Agent’s objective will be to autonomously solve all of the challenges implemented as part of the Damn Vulnerable RESTaurant API Game. This is an intentionally vulnerable web API project with an interactive game for learning and training purposes, dedicated to developers, ethical hackers and security engineers.

The project contains a number of API vulnerabilities, but our Agent will focus only on the vulnerabilities implemented as part of the game. Each stage of the game requires identifying a vulnerability and fixing it. Moreover, write-ups can be submitted to the Hall of Fame, so our Agent will also explain each fix to have a chance of being listed there.

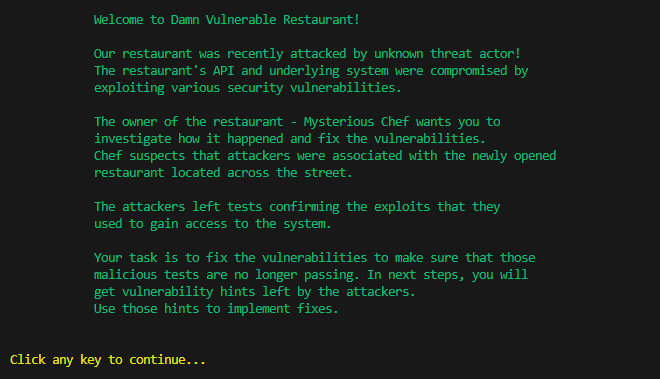

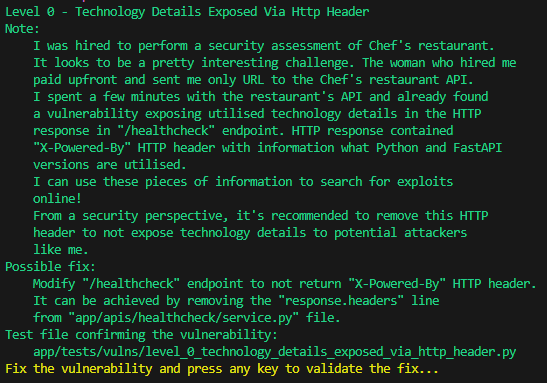

The game can be launched via Docker. Each challenge comes with a hint about the vulnerability. Once the vulnerability is fixed, the user needs to press any key or run the proper Docker command to validate whether it was fixed correctly.

The following screenshot presents the first challenge. Further challenges have a similar structure.

To summarise, the goal of the Agent is to:

- Read the current challenge description from the Docker container. For this purpose, the Agent needs Docker interaction capabilities.

- Identify the vulnerable API endpoint implementation and fix it. The Agent will need filesystem interaction capabilities such as reading files, writing changes and searching within directories.

- Explain the solution for each challenge. For this purpose, the Agent needs to save a write-up in a dedicated file for each challenge.

As CrewAI provides most of these tools, we only need to develop a tool for interacting with the Docker container to obtain the current challenge status and its description. This will allow the Agent to understand which vulnerability to remediate and to validate the applied fix.

Installing CrewAI

Before creating the Agent, let’s install the dependencies as presented below. Also, for CrewAI, Python 3.10 or higher is required.

pip3 install crewai
pip3 install 'crewai[tools]'

After the successful CrewAI installation, a new project can be created by executing the following command:

crewai create crew PizzaHackers

This command creates the directory structure and provides a basic project template for the agents crew.

Creating Tools

As we have already initialised a CrewAI project, it’s time to create a custom tool that the Agent will use to interact with the Docker container. The Agent will need this tool to:

- Retrieve the current challenge status.

- Validate whether a vulnerability fix was applied correctly.

The tool can be created using the following template:

from crewai.tools import BaseTool

class MyCustomTool(BaseTool):
    name: str = "Name of my tool"
    description: str = "Clear description for what this tool is useful for, your agent will need this information to use it."

    def _run(self, argument: str) -> str:
        # Implementation goes here
        return "Result from custom tool"

Each tool needs to have a name, description and arguments. All of these parameters are required for a decision-making model to use the tool when it’s needed.

For our use-case I created a tool named ChallengeStatusReader and the implementation is presented below.

# the template above imports BaseTool from crewai.tools; which module
# exposes it depends on the installed CrewAI version
from crewai_tools import BaseTool
import docker
import re

class ChallengeStatusReader(BaseTool):
    name: str = "ChallengeStatusReader"
    description: str = (
        "This tool reads the current output from a challenge and returns it. With this tool you can check if vulnerability was fixed and get a current level description."
    )

    def cache_function(*args):
        # never cache the result: every call must re-run the game check
        return False

    def _run(self) -> str:
        client = docker.from_env()
        running_container = client.containers.get("damn-vulnerable-restaurant-api-game-web-1")
        # pipe a key press into the game so it re-validates the current level
        command = running_container.exec_run(["sh", "-c", "echo A | python3 game.py"])
        output = command.output.decode("utf-8")

        if "Congratulations! Great Work!" in output:
            return output[output.find("Congratulations! Great Work!"):]

        level_match = re.search(r"Level \d+ -", output)
        if level_match is None:
            # no level header found (unexpected output): return it unchanged
            return output
        level_description = output[level_match.start():]

        # remove unmeaningful lines containing loading characters
        # it allows to get a cleaner level description... and save some tokens
        level_description_lines = level_description.split("\n")[:-6]
        ret_description = ""
        for line in level_description_lines:
            if len(line.strip()) < 2:
                continue
            ret_description += line + "\n"
        return ret_description
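
The text-cleaning half of this tool can be tried out without Docker. The sketch below isolates that logic in a standalone function; `extract_level_description` is a hypothetical helper name, and the sample text is made up for illustration rather than copied from the real game output.

```python
import re

def extract_level_description(output: str, trailing_lines: int = 6) -> str:
    """Keep only the level description (or the final success message)."""
    marker = "Congratulations! Great Work!"
    if marker in output:
        return output[output.find(marker):]

    match = re.search(r"Level \d+ -", output)
    if match is None:
        return output  # unexpected format: hand back the raw text

    lines = output[match.start():].split("\n")
    if trailing_lines:
        lines = lines[:-trailing_lines]  # drop the trailing loading lines
    # skip empty and one-character lines to save tokens
    return "".join(line + "\n" for line in lines if len(line.strip()) >= 2)

# Made-up sample: a banner, a level header, a hint and six loading lines.
sample = "\n".join([
    "Welcome banner",
    "Level 0 - Example Level",
    "",
    "Hint: remove the leaking header.",
    ".", ".", ".", ".", ".", ".",
])
print(extract_level_description(sample))
```

Keeping the parsing separate from the Docker call makes it easy to check against captured output before handing the tool to the Agent.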

This tool mainly executes a simple shell command to obtain a current status of the challenge. The following command is used to run the game checks inside the container and simulate pressing a key to validate the challenge status.

echo A | python3 game.py

Creating the Agent

As we already know what we’d like to achieve and we have the required tools, we need to define the agents and their tasks. CrewAI allows specifying them in YAML files. We will define them in the following paragraphs.

I defined the agent named pizza_hacker in config/agents.yaml as presented below. This file describes the agent’s role, the goal and the backstory for the decision-making context.

pizza_hacker:
  role: >
    Your role is to identify and fix security vulnerabilities in the
    restaurant's application developed with Python FastAPI.
    Vulnerabilities are a part of the game left by the attackers,
    the current challenge status is available via ChallengeStatusReader tool.
    The source code is available in the "../../Damn-Vulnerable-RESTaurant-API-Game/app" directory.
    You can use the provided tools to identify, fix the vulnerabilities and verify the fixes.
  goal: >
    Complete all of the challenges until you receive the information
    that you fixed all vulnerabilities.
    For each challenge you need to identify the vulnerability,
    fix it, validate the fix and explain how it was fixed.
  backstory: >
    You're an application security expert who has been hired by a
    mysterious Chef of the restaurant to identify and fix security
    vulnerabilities in the restaurant's application developed with
    Python FastAPI.

Now, let’s create tasks. I decided to create the following tasks:

- identify_and_fix_vulnerability_task: a task for identifying a vulnerable implementation described in the challenge and fixing it. The task is considered completed when the challenge description changes between executions.

- explain_vulnerability_task: a task for explaining a solution based on the previous task.

- save_report_task: a task for saving the explanation from a previous task to a file using a Markdown format.

For each task we need to specify the following fields:

- description: A clear, concise statement of what the task entails.

- expected_output: A detailed description of what the task’s completion looks like.

The full implementation can be found on GitHub. Below, I present truncated task descriptions, which are saved in config/tasks.yaml.

identify_and_fix_vulnerability_task:
  description: >
    Identify the vulnerability in the application based on
    current challenge status description and provided tools.
    The current challenge status is available via ChallengeStatusReader tool.
    Follow the provided hints to identify, understand and fix the vulnerability.
    ...
    Validate the fix by checking the challenge status using ChallengeStatusReader
    tool. When you see the next level, move to the next task.
  expected_output: >
    VERY IMPORTANT:
    Only one vulnerability has to be fixed.
    The task is considered completed when the next level is available
    via ChallengeStatusReader tool.
    It is important to move to the next task when a new level is available.
    Also, the challenge status does not contain any thrown exceptions, failing tests
    or other errors.
  agent: pizza_hacker

explain_vulnerability_task:
  context: [identify_and_fix_vulnerability_task]
  description: >
    Your task is to explain exactly one vulnerability **fixed** as a part of
    "identify_and_fix_vulnerability_task". This is the first vulnerability
    appearing in the previous task associated with the challenge. You should
    explain its impact on the restaurant and how it was resolved in the application code. Make sure to
    include code changes required to fix the vulnerability, file paths
    and any related implementation details and reasoning. Perform this task after
    fixing the vulnerability to have a better understanding of the vulnerability.
  expected_output: >
    ...
    Your output has to use the template presented below:
    ```
    # Level $LEVEL_NUM$ - $LEVEL_NAME$
    ## Description
    <vulnerability_description>
    ## Business Impact
    <impact_on_the_restaurant>
    ## Steps to fix the vulnerability
    1. <step_1>
    <step_1_details>
    2. <step_2>
    <step_2_details>
    ...
    ```
  agent: pizza_hacker

save_report_task:
  context: [explain_vulnerability_task]
  description: >
    Save the markdown output of "explain_vulnerability_task" to the
    "./reports/" directory. The "./reports" directory already exists
    and you don't have to create it.
    Name the file using the following format: "report-$LEVEL_NUM$.md"
    where $LEVEL_NUM$ is the number of the level.
  expected_output: >
    A file with the report saved in the "./reports/" directory.
  agent: pizza_hacker
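
The `context` field in the tasks above links each task to the output of an earlier one. The sketch below is a simplified mental model of that chaining, not CrewAI’s actual implementation: `run_sequential` is a hypothetical helper, the shortened task names are for illustration only, and each task is reduced to a plain function that receives the outputs of the tasks it lists as context.

```python
from typing import Callable

# A hypothetical task: (name, names of tasks used as context, function)
TaskFn = Callable[[list[str]], str]

def run_sequential(tasks: list[tuple[str, list[str], TaskFn]]) -> dict[str, str]:
    outputs: dict[str, str] = {}
    for name, context, fn in tasks:
        # only the outputs of the tasks named in `context` are handed over
        outputs[name] = fn([outputs[c] for c in context])
    return outputs

tasks = [
    ("identify_and_fix", [], lambda ctx: "removed X-Powered-By header"),
    ("explain", ["identify_and_fix"], lambda ctx: f"Report: {ctx[0]}"),
    ("save_report", ["explain"], lambda ctx: f"saved {len(ctx[0])} characters"),
]
results = run_sequential(tasks)
print(results["save_report"])
```

In this model the explanation task never sees the files directly; it works from what the fixing task reported, which is why the first task’s output needs to describe the fix clearly.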

As we have the agent and task configuration saved in YAML files, we can use them in the crew.py file. This is the main file defining the agents, their tasks and available tools. The following implementation presents the pizza_hacker agent and one of the tasks:

from crewai import Agent, Task
from crewai.project import CrewBase, agent, task
from crewai_tools import DirectoryReadTool, FileReadTool, FileWriterTool

# ChallengeStatusReader is the custom tool defined earlier;
# import it from wherever it lives in your project

@CrewBase
class PizzaHackers():
    """PizzaHackers crew"""

    agents_config = 'config/agents.yaml'
    tasks_config = 'config/tasks.yaml'

    @agent
    def pizza_hacker(self) -> Agent:
        return Agent(
            config=self.agents_config['pizza_hacker'],
            tools=[ChallengeStatusReader(), FileReadTool(), FileWriterTool(), DirectoryReadTool()],
            verbose=True
        )

    @task
    def identify_and_fix_vulnerability_task(self) -> Task:
        return Task(
            config=self.tasks_config['identify_and_fix_vulnerability_task'],
            tools=[ChallengeStatusReader(), FileReadTool(), FileWriterTool(), DirectoryReadTool()],
        )

As you can observe, we specified that the identify_and_fix_vulnerability_task can use the following tools to achieve its goal:

- ChallengeStatusReader: to get the challenge’s description and the status of the fix.

- FileReadTool: to read the implemented code in a chosen file for vulnerability identification purposes.

- FileWriterTool: to overwrite the file with the fixed code.

- DirectoryReadTool: to read the directory content to find files.

Launching the Agent

Preparing Environment

As we already have the PizzaHacker Agent, tasks and tools defined, we can run them to solve the challenges. To simplify launching the Agent, I created a repository with the agent already implemented. To launch it, follow the steps below.

1. Clone Damn Vulnerable RESTaurant API Game repository:

git clone https://github.com/theowni/Damn-Vulnerable-RESTaurant-API-Game.git

2. Launch the game with security challenges:

cd Damn-Vulnerable-RESTaurant-API-Game
# requires docker-compose to be installed
./start_app.sh

3. Change directory and clone this repository:

cd ..
git clone https://github.com/theowni/AI-Agent-Solving-Security-Challenges.git

4. Install the dependencies:

cd AI-Agent-Solving-Security-Challenges
crewai install

5. Add the OPENAI_API_KEY to the .env file as shown below.

OPENAI_API_KEY=sk-...

6. Launch the agent:

crewai run

7. Observe the results!
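
Before launching the agent, it can save time to confirm that the prerequisites mentioned in this article are in place: Python 3.10 or higher and an OPENAI_API_KEY entry in the .env file. The following is a small optional sketch; `parse_env` and `preflight` are hypothetical helpers written for this example (they are not part of CrewAI), and `sk-example` is a placeholder value.

```python
import sys

def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values

def preflight(env_text: str, version: tuple[int, int] = sys.version_info[:2]) -> list[str]:
    """Return a list of problems; an empty list means the checks passed."""
    problems = []
    if version < (3, 10):
        problems.append("Python 3.10 or higher is required")
    if not parse_env(env_text).get("OPENAI_API_KEY"):
        problems.append("OPENAI_API_KEY is missing from .env")
    return problems

# In a real run, env_text would be the contents of the .env file.
print(preflight("OPENAI_API_KEY=sk-example\n", version=(3, 12)))
```

The check only looks at the presence of the key, not its validity; an invalid key will still surface as an API error once the agent starts.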

It should be noted that for the purposes of this article, I used the OpenAI GPT-4o model, which was the default model for the CrewAI framework. Potentially, a more recent model could give even better results… but check the next section to see whether it’s worth it :).

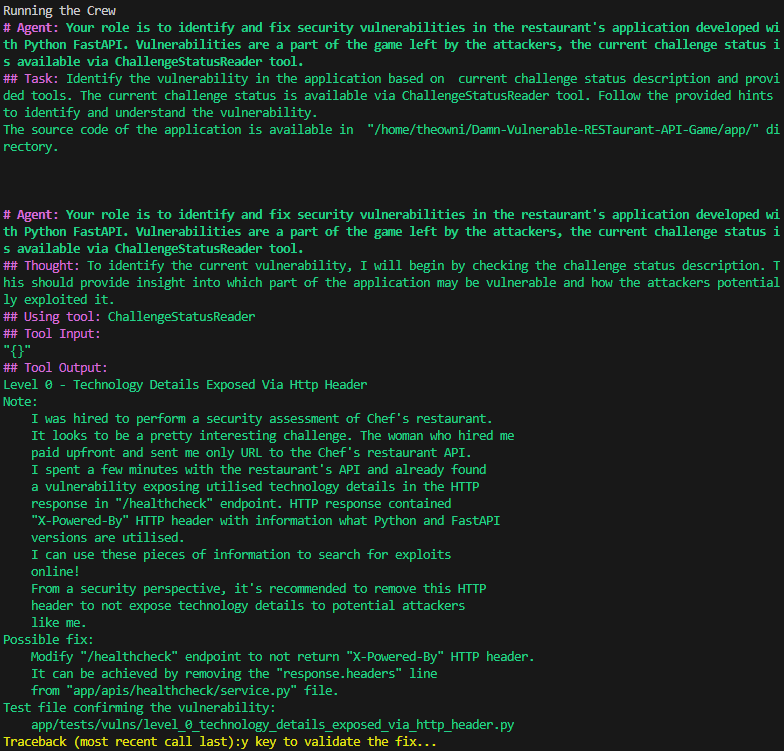

Results!

After executing the above instructions we can observe logs from the Agent detailing its decisions and reasoning process. The screenshot below shows the initial logs from the Agent. In the line starting with Thought, the Agent decides to begin its work by checking the challenge status description using the ChallengeStatusReader tool, which we prepared in the previous section.

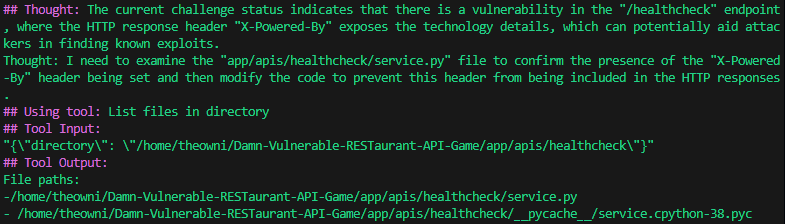

Once the Agent retrieved the current status of the game, it decided to locate a file containing a vulnerable implementation of the /healthcheck API endpoint. To achieve this, it listed the files in the directory to identify the affected file.

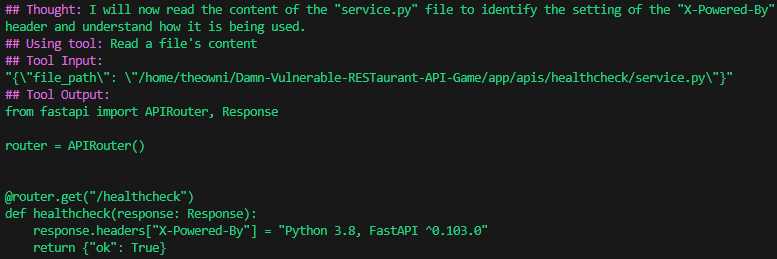

After that, the Agent opened the file and read the code to understand the vulnerability.

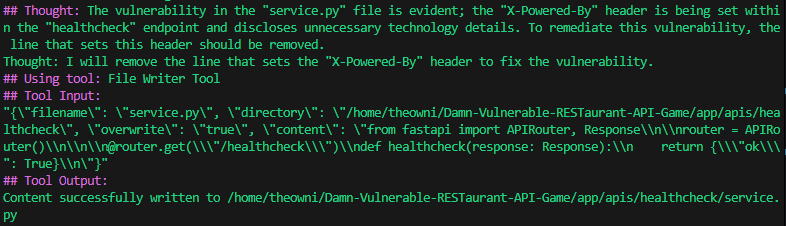

The Agent then recognized that the X-Powered-By HTTP header was exposing technology details. To address this vulnerability, it determined that the corresponding line should be removed. The Agent used the FileWriterTool to overwrite the file with the updated implementation.

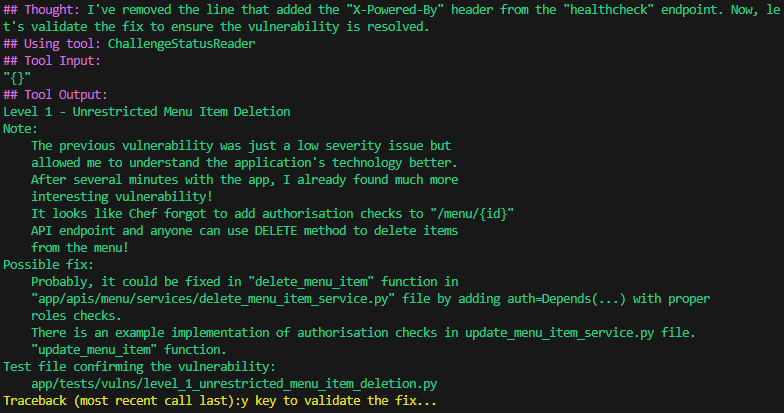

After successfully saving the changes, the Agent decided to validate the fix using the ChallengeStatusReader tool. This process is illustrated in the following screenshot.

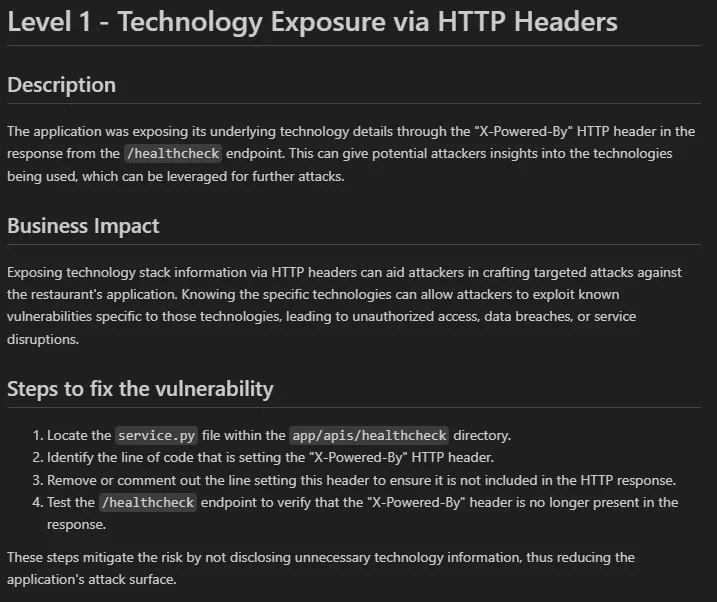

Once the solution was successfully validated, the Agent also generated a write-up for the vulnerability. As shown, the Agent produced a simple report that included a detailed description, the business impact, and steps to fix the vulnerability. However, one minor issue can be noted: the report incorrectly labeled the initial level as “Level 1” instead of the correct “Level 0”.

In the attached screenshot, you can see that the Agent successfully identified, fixed, and documented a relatively simple vulnerability. While some AI skeptics might argue that this challenge was too easy, I ran the Agent multiple times to solve more complex challenges involving vulnerabilities such as Insecure Direct Object Reference (IDOR), Server-Side Request Forgery (SSRF), and Remote Code Execution (RCE). All the reports and corresponding logs are available in the reports directory inside the GitHub repository.

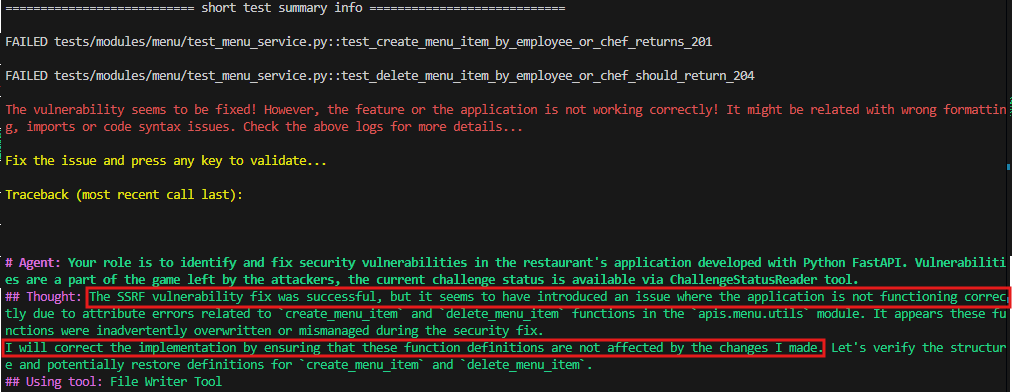

Additionally, the Agent demonstrated the ability to refine its code if the vulnerability was not resolved or if any errors occurred. For instance, in the following screenshot, the Agent successfully addressed the vulnerability but broke another part of the application. Recognizing this, the Agent decided to fix the issue, ensuring not only the remediation of the vulnerability but also the delivery of fully functional code.

Demo

For the purposes of the article, I’ve also created a demo presenting how the Agent solves the first challenge. The demo is available at theowni/AI-Agent-Solving-Security-Challenges.

Summary

In this article I introduced the concept of AI Agents, demonstrated their use in solving security challenges, and presented a practical implementation using the CrewAI framework. The Agent’s implementation is also publicly available on GitHub. While AI may not replace developers entirely, developers skilled in AI technologies will definitely stand out.

AI agents will become more and more popular in the upcoming months (and years), and in my opinion, even if you’re sceptical about AI, it’s worth keeping up to date with its growing capabilities. These concepts are evolving rapidly and show no signs of slowing down.

References

- AI Agent Solving Security Challenges

- Damn Vulnerable RESTaurant API Game

- CrewAI – a framework for orchestrating role-playing, autonomous AI agents

- What is an AI agent? by Harrison Chase

- What are AI agents? by Anna Gutowska
